Baidu is always fleecing me, so lately I've picked Python back up and started learning it again.

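While looking for something to practice on, I found that Baidu's Quanmin Video (全民视频) makes a very good target. So I gave it a go, and it worked out nicely.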

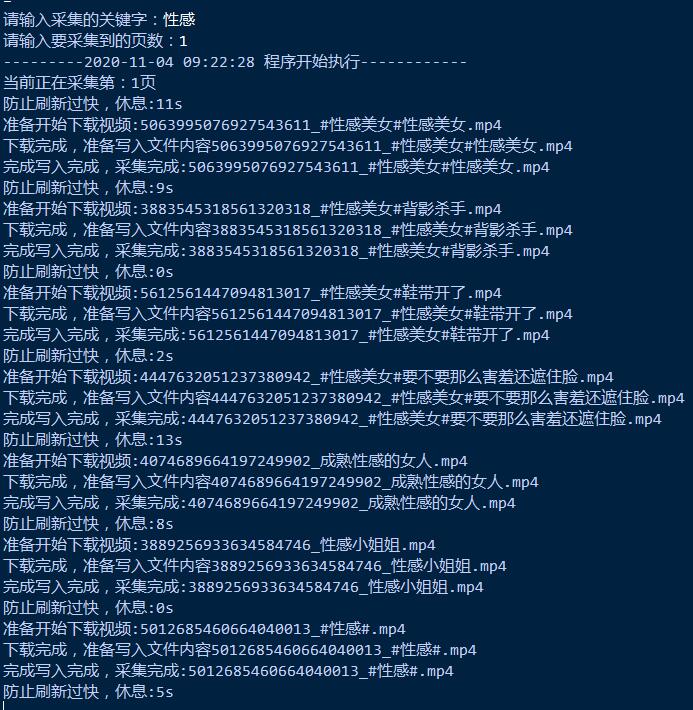

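You enter the keyword to search for and the number of pages to collect, which is very convenient. There are lots of short videos and plenty of goodies.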
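The keyword and page count become query parameters of the search endpoint the script calls: `searchmorelist`, with `rn=12` results per page, `pn` as the page number and `q` as the keyword. Below is a minimal sketch that builds one URL per page. It assumes the endpoint still accepts these parameters, which is not guaranteed because it is an undocumented internal API. `build_page_urls` is a hypothetical helper name.

```python
from urllib.parse import quote, urlencode

# Search endpoint used by the script (undocumented; may change without notice)
API_URL = 'https://quanmin.baidu.com/wise/growth/api/home/searchmorelist'


def build_page_urls(keyword, pages, per_page=12):
    """Return one search URL per page, for pages 1..pages inclusive."""
    urls = []
    for pn in range(1, pages + 1):
        params = {'rn': per_page, 'pn': pn, 'q': keyword,
                  'type': 'search', '_format': 'json'}
        # quote_via=quote encodes spaces as %20, like urllib.parse.quote
        urls.append(API_URL + '?' + urlencode(params, quote_via=quote))
    return urls


for u in build_page_urls('cat video', 2):
    print(u)
```

The page range is inclusive, so asking for 3 pages yields exactly pages 1, 2 and 3.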

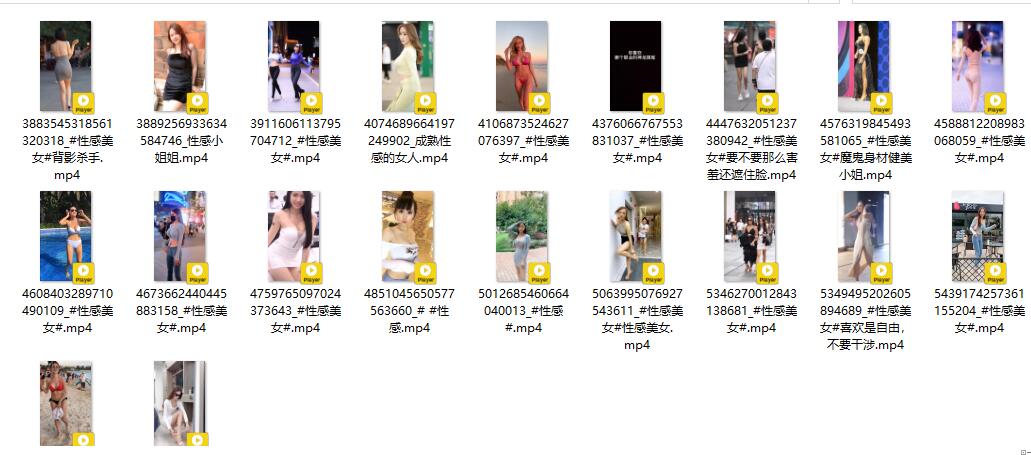

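All videos are saved under the `download` directory, in a subfolder named after the current hour (YYYYMMDDHH). The `data.txt` file records every video already collected, so the same video is not saved twice.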
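The de-duplication idea is simple. Each downloaded video is recorded as one `vid/title` line in `data.txt`, and anything already listed is skipped. Below is a minimal sketch of that record-keeping, which loads the file into a set for fast lookups. The helper names `load_seen` and `mark_seen` are hypothetical.

```python
import os


def load_seen(path):
    """Return the set of 'vid/title' keys already recorded in path."""
    if not os.path.exists(path):
        return set()
    with open(path, encoding='utf-8') as f:
        return {line.rstrip('\n') for line in f if line.strip()}


def mark_seen(path, key, seen):
    """Append key to the record file and to the in-memory set."""
    with open(path, 'a', encoding='utf-8') as f:
        f.write(key + '\n')
    seen.add(key)
```

In a download loop you would call `load_seen` once, check `key in seen` before downloading, and call `mark_seen` after each download finishes.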

The code is below; please install the third-party modules it imports yourself.
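If you are not sure which modules are missing, a quick check like the following can help. It is a sketch, not part of the original script. The mapping from import name to pip package name is an assumption; `urllib3` is normally installed along with `requests`.

```python
import importlib.util

# Import name -> pip package name for the third-party modules the script uses.
# Add 'bs4': 'beautifulsoup4' if your copy still imports BeautifulSoup.
REQUIRED = {'requests': 'requests', 'urllib3': 'urllib3', 'emoji': 'emoji'}


def missing_packages(required=REQUIRED):
    """Return the pip package names whose modules cannot be found."""
    return [pkg for mod, pkg in required.items()
            if importlib.util.find_spec(mod) is None]


missing = missing_packages()
if missing:
    print('pip install ' + ' '.join(missing))
else:
    print('all dependencies installed')
```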

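Update:

2020-11-4 16:11: Thanks to a reader who spotted that one of the functions was missing a parameter, which caused many videos to fail to save. This is now fixed; please update your copy of the code.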

```python
#!/usr/bin/python3
# -*- coding: UTF-8 -*-
# 风之翼灵
# www.fungj.com
# Quanmin Video (全民视频) https://quanmin.baidu.com/

import os
import random
import re
import time

import emoji
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry

DEFAULT_HEADERS = {
    'Accept-Language': 'zh-CN,zh;q=0.9,en;q=0.8,zh-TW;q=0.7',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36',
    'Accept': '*/*',
    'Referer': 'https://quanmin.baidu.com/',
}

PROXY = {
    # 'http': 'socks5://127.0.0.1:1180',
    # 'https': 'socks5://127.0.0.1:1180'
}

API_URL = 'https://quanmin.baidu.com/wise/growth/api/home/searchmorelist'
# Records "vid/title" for every video already collected
DATA_FILE = os.path.join(os.getcwd(), 'data.txt')


def make_session():
    s = requests.Session()
    # Retry failed requests up to 3 times with exponential backoff.
    # urllib3 >= 1.26 names this parameter allowed_methods
    # (the older method_whitelist was removed in urllib3 2.0).
    retries = Retry(total=3, backoff_factor=0.5,
                    allowed_methods=['HEAD', 'GET', 'POST', 'PUT'],
                    status_forcelist=[413, 429, 500, 502, 503, 504])
    s.mount('https://', HTTPAdapter(max_retries=retries))
    s.mount('http://', HTTPAdapter(max_retries=retries))
    s.headers.update(DEFAULT_HEADERS)
    s.proxies = PROXY
    # s.verify = False
    return s


def clean_title(title):
    # Remove HTML tags (search hits come wrapped in highlight tags)
    title = re.sub(r'<[^>]+>', '', title, flags=re.S)
    # Turn emoji into :name: codes, then delete them
    # (this also drops any ordinary text between two colons)
    return re.sub(r':(.*?):', '', emoji.demojize(title)).strip()


def load_done(path):
    # Set of "vid/title" keys already recorded (empty if data.txt is missing)
    if not os.path.exists(path):
        return set()
    with open(path, encoding='utf-8') as f:
        return {line.rstrip('\n') for line in f}


def savefile(path, vainfo):
    # Append the key so this video is skipped on later runs
    with open(path, 'a', encoding='utf-8') as f:
        f.write(vainfo + '\n')


def download_play(session, vid, title, play_url, path, vainfo):
    # One download folder per hour, e.g. download/2020110416/
    nowtime = time.strftime('%Y%m%d%H', time.localtime())
    fpath = os.path.join(os.getcwd(), 'download', nowtime)
    os.makedirs(fpath, exist_ok=True)

    # Build the file name before the try block so the except branch can use it;
    # replace characters that are not allowed in file names
    safe_title = re.sub(r'[\\/:*?"<>|\r\n]', '_', title)
    vname = str(vid) + '_' + safe_title + '.mp4'
    print('Starting download: ' + vname)
    try:
        r = session.get(play_url, stream=True, timeout=30)
        r.raise_for_status()
        # Stream the video to disk in 1 MB chunks
        with open(os.path.join(fpath, vname), 'wb') as mp4:
            for chunk in r.iter_content(chunk_size=1024 * 1024):
                if chunk:
                    mp4.write(chunk)
        print('Download finished, recording: ' + vname)
        savefile(path, vainfo)
        print('Recorded, done: ' + vname)
    except (requests.RequestException, OSError) as e:
        print(vname + ' could not be saved, skipping (' + str(e) + ')')
        # Record it anyway so a broken video is not retried on every run
        savefile(path, vainfo)


def main(session, keyword, pages):
    done = load_done(DATA_FILE)
    # Fetch pages 1..pages inclusive
    for pn in range(1, pages + 1):
        print('Collecting page ' + str(pn))
        params = {'rn': 12, 'pn': pn, 'q': keyword,
                  'type': 'search', '_format': 'json'}
        resp = session.get(API_URL, params=params, timeout=15)
        resp.raise_for_status()
        json_str = resp.json()
        # '成功' ("success") is the literal errmsg the API returns
        if json_str.get('errno') != 0 or json_str.get('errmsg') != '成功':
            print('Failed to read the API response....')
            return
        for ii in json_str['data']['list']['video_list']:
            vid = str(ii['vid'])
            title = clean_title(ii['title'])
            play_url = ii['play_url']
            vainfo = vid + '/' + title
            if vainfo in done:
                print(vid + ',' + title + ' already exists, skipping....')
                continue
            # Sleep a random 0-14 s between downloads to avoid hammering the server
            tt = random.randint(0, 14)
            print('Throttling, sleeping ' + str(tt) + 's')
            time.sleep(tt)
            download_play(session, vid, title, play_url, DATA_FILE, vainfo)
            done.add(vainfo)


if __name__ == '__main__':
    keyword = input('Enter the keyword to collect: ').strip()
    pages = int(input('Enter the number of pages to collect: ').strip())
    nowtime = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime())
    print('---------' + nowtime + ' program started------------')
    session = make_session()
    main(session, keyword, pages)
```
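The video title ends up in the file name. Titles can contain characters such as `?`, `:` or `*`, which Windows rejects in file names, and `/`, which no OS allows; any of these is one way a download can fail to save. Below is a minimal sketch that turns a raw title into a safe file name. `safe_filename` and the 80-character cap are illustrative assumptions, not part of the script.

```python
import re

# Characters not allowed in Windows file names ('/' is rejected everywhere)
ILLEGAL = re.compile(r'[\\/:*?"<>|]')
TAG = re.compile(r'<[^>]+>', re.S)


def safe_filename(title, max_len=80):
    """Strip HTML tags, replace illegal characters and cap the length."""
    name = TAG.sub('', title)
    name = ILLEGAL.sub('_', name)
    name = re.sub(r'\s+', ' ', name).strip()  # also collapses newlines and tabs
    return name[:max_len] or 'untitled'


print(safe_filename('<em>a/b</em>: c?'))  # → a_b_ c_
```

Because the script prefixes each file name with the video ID, two titles that sanitize to the same string still produce distinct files.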